A week or two ago, a conversation with my girlfriend reminded me of this old video from the Defcon 18 conference, in which a hacker by the name of Zoz recounts how he located and recovered his recently stolen computer by way of some fancy Internet skills. In addition to being a great reminder about data safety and security, it’s a pretty damn funny story.

Re-watching the video reminded me I’ve always wanted to experiment with scripting the iSight camera on my MacBook Pro. Not necessarily for the purpose of having photo evidence in the event of a theft, but more as a fun exercise in scripting and automation. Maybe I’m easily entertained (rhetorical), but I found the process and results thoroughly enjoyable.

The first thing I needed was a command line interface to the iSight camera. Despite my best Googling efforts, I wasn’t able to find any native OS tools to fire the camera (I’m still not sure if there is one). But lucky for me, nearly every search I did pointed me to a free, no-longer-supported-but-still-functional CLI tool called iSightCapture. Installation is as simple as tossing it into /usr/local/bin.
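A quick sanity check after installing might look like the sketch below. It assumes the binary sits at /usr/local/bin/isightcapture, as described above; `check_tool` is a made-up helper name, not part of iSightCapture.

```shell
#!/bin/bash

# check_tool is a hypothetical helper: it succeeds only if the given
# path exists and is executable by the current user.
check_tool() {
    if [ -x "$1" ]; then
        echo "found: $1"
    else
        echo "missing or not executable: $1" >&2
        return 1
    fi
}

# Assumed install location, taken from the paragraph above.
check_tool "${APPP:-/usr/local/bin/isightcapture}" || echo "install iSightCapture first"
```

If the check fails on a file that is clearly there, a `chmod +x` on the binary is the usual fix.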

Regarding syntax, here’s the pertinent information from the iSightCapture readme file:

isightcapture [-v] [-d] [-n frame-no] [-w width] [-h height] [-t jpg|png|tiff|bmp] output-filename

Options

-v output version information and exit

-d enable debugging messages. Off by default

-n capture nth-frame

-w output file pixel width. Defaults to 640 pixels.

-h output file pixel height. Defaults to 480 pixels.

-t output format - one of jpg, png, tiff or bmp. Defaults to JPEG.

Examples

$ ./isightcapture image.jpg

will output a 640x480 image in JPEG format

$ ./isightcapture -w 320 -h 240 -t png image.png

will output a scaled 320x240 image in PNG format

Triggering

I wanted the ability to remotely trigger the iSight camera from my phone, and have it run for a predefined interval of time. To do this, I’d use a watch folder and a trigger file, uploaded from my phone, to initiate the script. This would theoretically be the method I’d use to “gather photographic evidence” in the event my laptop is stolen.

As usual, to set this up, I turned to my trusty friend Dropbox. To keep things tidy, I needed a couple of folders. The directory structure looks like this:

Photos taken by the script land in the top-level directory, iSight_backup. The script itself lives in the scripts folder, and the trigger folder stays empty, awaiting a text file uploaded via Dropbox on my phone.
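For anyone setting this up from the Terminal rather than Finder, the layout can be created in one go. This is a minimal sketch: `make_layout` is a hypothetical helper name, and the path you pass in is whatever your own Dropbox folder happens to be.

```shell
#!/bin/bash

# make_layout is a hypothetical helper: given the top-level folder,
# it creates the scripts and trigger subfolders beneath it.
make_layout() {
    # -p creates missing parent folders and does nothing for existing ones
    mkdir -p "$1/scripts" "$1/trigger"
}

# Only act when a folder is passed on the command line, e.g.
#   bash make_layout.bash /Path/To/Dropbox/iSight_backup
if [ -n "${1:-}" ]; then
    make_layout "$1"
fi
```

Because `mkdir -p` is harmless on folders that already exist, running it twice won't disturb any photos already in place.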

The Script

Here’s the script, called isbackup_t.bash, and saved into my iSight_backup/scripts/ folder:

#!/bin/bash

# path to iSightCapture CLI tool

APPP="/usr/local/bin/isightcapture"

# path to Dropbox folder to receive photos

FPATH="/Path/To/Dropbox/iSight_backup/"

# path to trigger file

TPATH="/Path/To/Dropbox/iSight_backup/trigger/"

# select photo filetype; jpg, png, tiff, or bmp

XTN=".jpg"

# number of photos to take

PNUM="24"

# interval between photos (seconds)

PINT="300"

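for i in $(seq 1 "$PNUM");

do

# UTC date and time stamp for the file name

DTS=$(date -u +"%F--%H-%M-%S")

"$APPP" "$FPATH$DTS$XTN"

sleep "$PINT"

done

# empty the trigger folder so the script waits for the next trigger file

rm -f "$TPATH"*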

The Details

Each photo gets a date and time stamp added to the file name for easy sorting. Since the FPATH destination is set to a folder within my Dropbox, and each file is a 640x480 JPEG, approximately 25 KB in size, I can see the resulting photos on my phone just seconds after they’re taken.
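To see what those file names look like, the naming step can be pulled out on its own. A small sketch, where `photo_name` is a hypothetical helper that reuses the same `date` format string as the script:

```shell
#!/bin/bash

# photo_name is a hypothetical helper.
#   $1 = destination folder, including the trailing slash
#   $2 = file extension, including the dot
photo_name() {
    # -u gives UTC, so the stamp is not in local time
    printf '%s%s%s\n' "$1" "$(date -u +"%F--%H-%M-%S")" "$2"
}

photo_name "/Path/To/Dropbox/iSight_backup/" ".jpg"
```

The stamp runs year, month, day, hour, minute, second, so a plain alphabetical sort of the folder is also a chronological one. Note that `-u` means the times are UTC rather than local time.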

By setting PNUM to 24, and PINT to 300, the script will take a photo every 5 minutes, for the next 2 hours, then stop until I upload another trigger file. However, since the script itself lives in Dropbox, I can change the interval or duration variables from my iOS text editor at any time.
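The arithmetic behind those two numbers is easy to check before changing them. A minimal sketch, with `run_seconds` as a made-up helper name:

```shell
#!/bin/bash

PNUM=24    # number of photos
PINT=300   # seconds between photos

# run_seconds is a hypothetical helper: total run time in seconds
# for $1 photos, each followed by a $2 second sleep.
run_seconds() {
    echo $(( $1 * $2 ))
}

TOTAL=$(run_seconds "$PNUM" "$PINT")
printf '%d photos, one every %d min, for %d min total\n' \
    "$PNUM" $(( PINT / 60 )) $(( TOTAL / 60 ))
# prints: 24 photos, one every 5 min, for 120 min total
```

Since the loop sleeps after every photo, including the last, the final shot lands at roughly the 115-minute mark and the trigger folder is cleared about five minutes later.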

Tying The Room Together

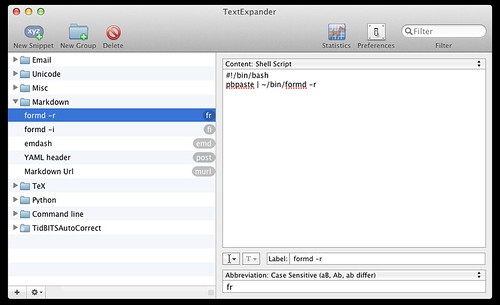

All that’s left to complete our little project is to create a launchd task to keep an eye on our trigger folder, and fire up the script if anything lands inside. Since creating, modifying, or using launchd tasks via any method other than a GUI is currently beyond me, I turned to Lingon.

Here’s the resulting launchd task:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>Label</key>

<string>isbackup triggered</string>

<key>ProgramArguments</key>

<array>

<string>bash</string>

<string>/Path/To/Dropbox/iSight_backup/scripts/isbackup_t.bash</string>

</array>

<key>QueueDirectories</key>

<array>

<string>/Path/To/Dropbox/iSight_backup/trigger</string>

</array>

</dict>

</plist>

And just like that, we’ve got a setup that will monitor our trigger folder, fire up our script when it sees anything inside, and save each file to our Dropbox.
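The QueueDirectories key tells launchd to start the job when the watched folder is not empty, which is why the script clears the trigger folder when it finishes. The same check can be sketched in shell, handy for testing the trigger by hand. `dir_has_entries` is a hypothetical helper, and this is only an approximation of what launchd does internally.

```shell
#!/bin/bash

# dir_has_entries is a hypothetical helper: it succeeds if the path is
# a directory holding at least one entry. ls -A includes dotfiles.
dir_has_entries() {
    [ -d "$1" ] && [ -n "$(ls -A "$1" 2>/dev/null)" ]
}

# Placeholder path, matching the one used in the launchd task above.
TPATH="${TPATH:-/Path/To/Dropbox/iSight_backup/trigger}"

if dir_has_entries "$TPATH"; then
    echo "trigger present"
else
    echo "no trigger"
fi
```

Dropping any file into the trigger folder should flip the result, and emptying the folder should flip it back.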

During my research for this project, I found a number of people using iSightCapture for things other than a makeshift security tool. Some, for example, used it to take a self-portrait at login every day and auto-upload it to an online photo diary.

I thought that was a fun idea, so I wrote a second, shorter version of my script to automatically take a shot every 2 hours without the need for a trigger. For my version, however, I was most definitely not interested in auto-uploading the photos.

The script:

#!/bin/bash

APPP="/usr/local/bin/isightcapture"

FPATH="/Path/To/Dropbox/iSight_backup/"

DTS=$(date -u +"%F--%H-%M-%S")

XTN=".jpg"

"$APPP" "$FPATH$DTS$XTN"

And the launchd task:

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>Disabled</key>

<false/>

<key>KeepAlive</key>

<false/>

<key>Label</key>

<string>IS Secuirty</string>

<key>ProgramArguments</key>

<array>

<string>bash</string>

<string>/Path/To/Dropbox/iSight_backup/scripts/isbackup.bash</string>

</array>

<key>QueueDirectories</key>

<array/>

<key>RunAtLoad</key>

<true/>

<key>StartInterval</key>

<integer>7200</integer>

</dict>

</plist>

Since I typically use a multiple-monitor setup when working, I almost never see the green light come on when the script runs. When I check the photo directory, usually at the beginning of the next day, I’m treated to at least a couple of hilarious images. Like these, for example: